What will really change for us as humans in our dealings with technology and machines

In our understanding, technology is a tool, like a spade or a knife. Steve Jobs also had this image in mind when he spoke of the computer as a bicycle for our minds. But in many respects, this image has long since ceased to apply to technological systems such as CRM (customer relationship management), a workstation in a modern manufacturing process, or AI and robotics.

Yes, tools such as saws, cordless screwdrivers and forks still exist. With them, people can do things faster or better, or do things they could not have done at all without them. We see these tools as real additions or extensions to our abilities! And indeed they are.

Technical systems reduce the human scope of action

What changes when we turn individual tools into a technical system such as a CRM or a production line in a factory? People will then only do the things that cannot yet be done by the technical systems. With this technological progress, we are slowly but surely reducing the scope of competence and action for pilots in the cockpit, doctors in our western healthcare system or factory workers. This is because the relevant knowledge is contained in the overall process and we want it to be scaled, highly automated, and reliable, i.e. efficient. Preferably without the disruptive and often unreliable human being.

Economies of scale and efficiency have shaped our world view

But what have we as humanity, as engineers, made of this?

First we created tools with which a single person can do things at all, or do them faster (for example, hammers, lawnmowers or excavators).

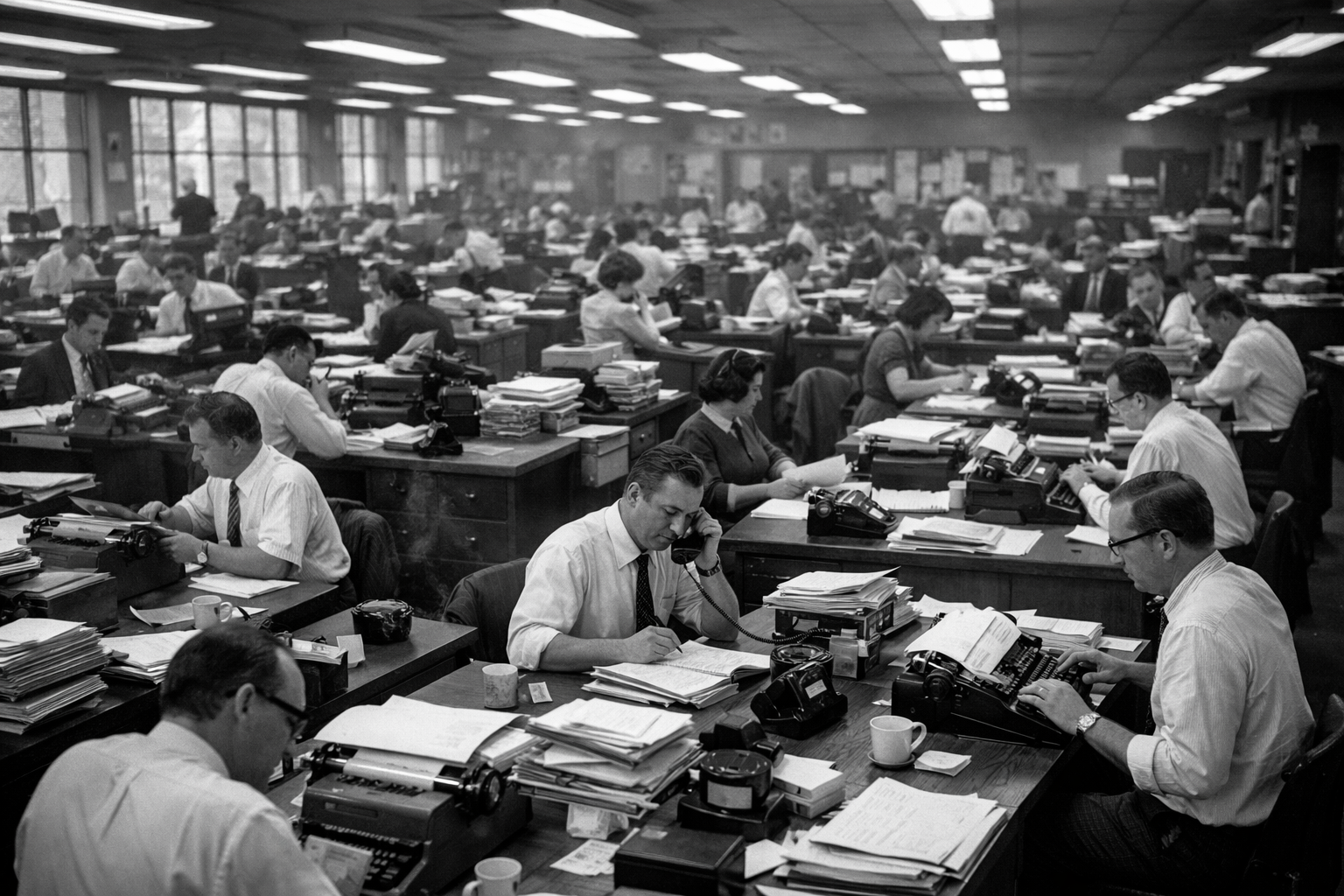

Then we created systems with which we can get groups of people to do much more in less time than would have been possible with individual tools – much more reproducibly, with equally good quality and on a larger scale. Examples of this are our factories, the railroad as a system, schools and hospitals.

And so this gradual development meant that we humans carry out only the remaining activities that cannot yet be performed by machines. The installation of doors in car production, for example, is still done by human teams today. A robot for this task doesn’t exist yet, but we are working on it.

The tragic thing is that the same paradigm also applies to the people who plan the factory. Even CAD planners and production engineers do only the part that a planning or simulation tool cannot yet do.

Today, nobody can escape this efficiency system, which is so tragic for us humans!

Then Silicon Valley came along and created a new paradigm

In the early 2000s, people in California invented a new paradigm for the consumer world. They designed digital products and services that performed a task and felt like tools that we could use like a hammer or a fork. First Google search and a free mail program, then more complex things like buying books online, and next e-commerce or online travel bookings.

The most important innovation was that the new products and services were not integrated and we could only ever take a small step with the new digital tools in the primarily non-digital world. Then we humans were once again the interface to the physical world, the millions of adapters from Silicon Valley to reality. This is how we have helped them to digitize the world in recent years. We were the interfaces from the digital to the real world.

For over 20 years, Silicon Valley has built the least inclusive – and therefore not people-centric – services. In the meantime, the big tech companies have turned several billion users and more than 60% of the world’s population into customers of their systems.

This is brutal scaling, in which the individual user is now just an infinitesimally small cog. Thanks to social media, AI and data networking, machines in the consumer sector now “understand” individual people so well and see through their mindsets so efficiently that people become the plaything of algorithms.

Tragic.

The printing press as a technological memorial

The last huge upheaval in knowledge storage and distribution before Google search was the printing press. The printing press created forms that are still established today, such as newspapers, books, and libraries as repositories of knowledge. As children, we grew up in a system that seemed stable. There were universities, editors, editorial offices, publishing houses – a system for stabilizing knowledge, opinions and their dissemination.

Before book printing brought enlightenment, rationality and more knowledge to more people, as well as stabilizing elements, it first made the world more insecure and unstable. The work “Malleus Maleficarum”, published in 1487 by Heinrich Kramer and Jacob Sprenger, served as a kind of handbook for the identification, persecution and punishment of witches. This allowed the persecution of witches to be standardized and scaled across Europe.

This is roughly where we are today with social media, nudging, our computer games and large language models.

The question is: can we move into a positive AI and robotics era without spending too many years burning witches again?

Why don’t we recognize the positive disruptive possibilities of AI and robotics?

There are two absolutely positive things that we talk far too little about when it comes to AI and robotics:

- How will AI change the way we interact with machines?

- What does it mean when information-processing machines, i.e. computers, will have bodies and will see, hear and understand the world?

I can only recommend that everyone gather their own experience with LLMs and try things out. The interfaces of ChatGPT, Perplexity or Claude do not have a search box à la Google; they are dialog tools. LLMs need context. They are not there to look up factual knowledge for us, such as where the tallest building in the world is.

How will AI change the way we interact with machines?

LLMs have no opinion of their own, but they can try to recognize the user’s intentions and will then reinforce them. The term “prompt engineering” completely fails to capture the fact that these machines give you input from almost all areas of knowledge. We have access to methodologies, ontologies and semantics from almost all areas of human knowledge. We just need to understand how to access this knowledge iteratively through task, context and questions in order to gain completely new perspectives on seemingly familiar facts.

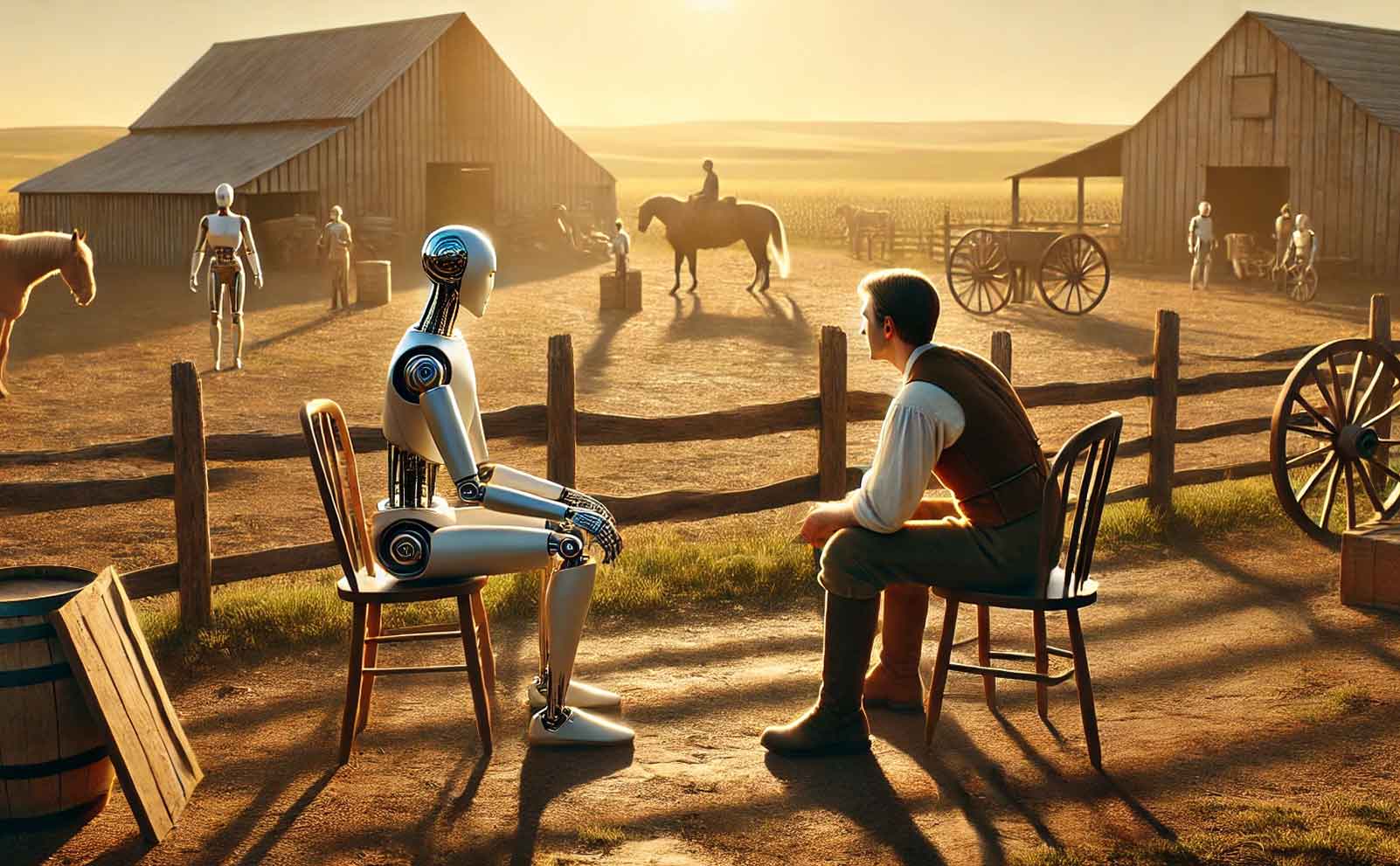

In this knowledge dialog, we are in effect sparring, coaching – because humans are designed to learn, understand and create in dialog. And it is precisely in such dialogs that people can live out and use their consciousness, their will to want, their will to create. LLMs do not have this level, even if they can now also reason and argue.

But we humans give them the sense of action, the relationship to the world. And this brings us full circle: the machines are once again a tool, a real bicycle for the mind. We just have to recognize it and use it!

We will (have to) get used to dialog with machines!

Today, preparation for an oral exam in a math course can be done entirely with LLMs. This starts with the creation of exam questions, and includes the entire exam dialog through to feedback, grading and suggestions for improvement. It is also possible to prepare complex meetings for almost any role and industry, with analysis of documents, taking the positions of various participants, a recommendation for procedures and coaching on how to handle objections in your own argumentation.

We just have to learn to use the machines for ourselves. To do this, we have to enter into a dialog with the machine, or more precisely with the LLMs.

It is no longer about static knowledge, knowledge trees or solutions for prefabricated tasks. It is about applying methodological expertise that can be called up almost at will to our personal and individual situations.

It is about conducting a dialog that we shape as humans, in which we as humans set the pace. We let the machine make suggestions, we choose from alternatives and thus take on the active role again as a human being. Never before in the history of mankind have we been able to interact with machines in this way.

It is time for us to free ourselves from the slavery of the systems we have created so far and regain our autonomy in dialog with the machines.

And now these machines are getting bodies

It’s a revolution that is happening quietly because nobody is talking about it. The robot 1.0 of the production process, which had to be told exactly what to do down to the smallest detail, is now becoming a “self-aware” robot.

In future, it will no longer take five years to set up a new production line for a new car model. We will be able to explain subtasks to the machines in dialog. They will come with capabilities such as collision avoidance, welding, picking or position recognition as basic functions that no longer have to be individually and laboriously programmed.

Machines learn to move in human spaces

Humanoid robots are just learning to open doors, climb stairs and find their way around an apartment. They are beginning to perceive their surroundings by labeling objects and thus classifying them. They will not only be able to orient themselves in space, but will also have self-awareness via sensors that use the results of their own actions as feedback impulses.

Humanoids are the logical consequence of combining LLMs with machine bodies. They are the logical consequence for machines that can move and interact in an environment made for humans, with stairs suitable for humans, windows and doors fitted for humans.

We will also be able to converse with these humanoids. They will be able to acquire knowledge from us.

We can hope that in the foreseeable future we will see humanoids that free us as humans from having to take on the remaining tasks that machines and robots cannot yet perform.

We can only hope that we, as a generation, will experience all these positive and new aspects of dealing with machines and not have to overcome a period of witch-hunts first!